Transcloud

August 12, 2024

Hi folks,

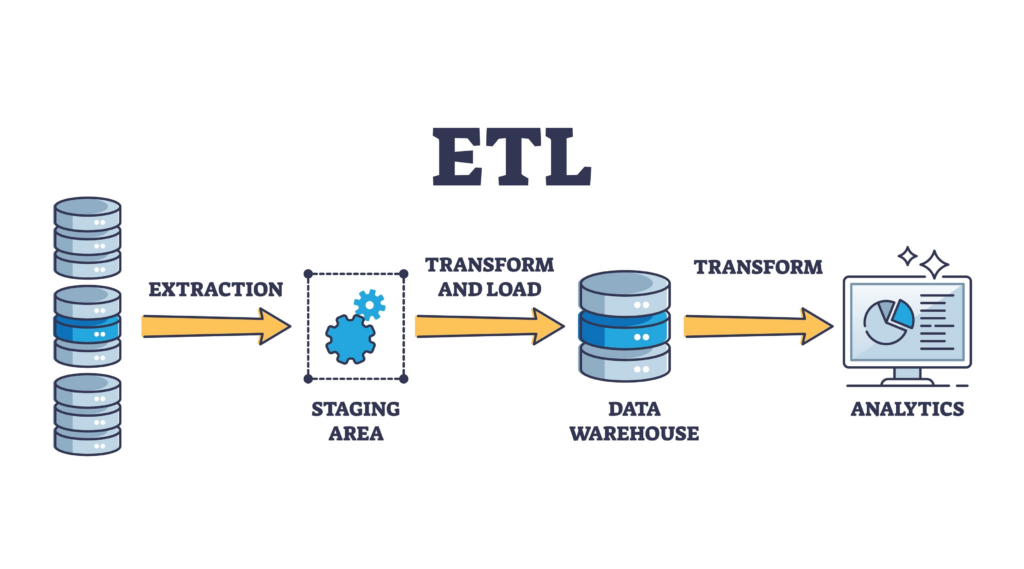

If you’ve stepped into the vast world of Data Analytics and Data Engineering, I bet you’ve encountered the term ETL (Extract, Transform, Load). ETL is that crucial process that allows us to turn raw data into actionable insights, acting as the backbone for data analytics and business intelligence.

However, as our data grows in volume and variety, optimizing these ETL processes becomes not just beneficial but essential for efficient data transformation. Why, you ask? Because optimized ETL processes mean faster insights, lower costs, and happier data teams! So, if you’re looking to get the most out of your GCP setup, you’ve come to the right place.

Let’s dive into the best practices to enhance your ETL processes for seamless data transformation. Ready to transform your data game?

Let’s get started!

Understanding ETL Processes on Google Cloud Platform

ETL (Extract, Transform, Load) is a foundational process in data handling and analytics. Its significance lies in its ability to efficiently manage and prepare data for analysis.

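At its core, ETL encompasses three key stages:

- Extract: pulling raw data out of source systems such as databases, applications, and files.
- Transform: cleaning, standardizing, and enriching that data so it is consistent and fit for analysis.
- Load: writing the prepared data into a target system, such as a data warehouse, where it can be queried.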

The transformation phase of ETL is particularly critical as it serves to harmonize and optimize the data for analysis. By standardizing formats, resolving inconsistencies, and enriching with additional context, businesses can ensure that their data is accurate, reliable, and actionable.
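To make the three stages concrete, here is a minimal local sketch in Python using only the standard library. It assumes a small, hypothetical CSV export with inconsistent date formats and country codes, and it uses an in-memory SQLite database as a stand-in for the target warehouse. On GCP, the load target would typically be BigQuery instead.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical raw export: mixed date formats, stray whitespace, mixed-case country codes.
RAW_CSV = """order_id,order_date,country,amount
1001,2024-08-01, us ,19.99
1002,08/02/2024,US,5.00
1003,2024-08-02,gb,12.50
"""

def extract(text: str) -> list[dict]:
    """Extract: read raw rows from the source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(text)))

def normalize_date(value: str) -> str:
    """Standardize dates to ISO 8601, accepting the two formats seen in the source."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: standardize formats and resolve inconsistencies."""
    return [
        (
            int(row["order_id"]),
            normalize_date(row["order_date"]),
            row["country"].strip().upper(),
            round(float(row["amount"]), 2),
        )
        for row in rows
    ]

def load(records: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned records into the target store."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, order_date TEXT, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall())
```

Keeping each stage in its own function makes the transformation logic easy to test in isolation before it moves into a managed service.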

Moreover, ETL enables organizations to unify data from disparate sources, bringing together information from databases, cloud services, IoT devices, and more. This consolidation ensures that data is centralized and readily accessible for analytical purposes, facilitating informed decision-making and driving business insights.
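The following sketch illustrates unification. Two hypothetical sources, a database table and an IoT feed, describe the same kind of reading with different field names, units, and timestamp formats. Each source gets a small mapping function onto one shared schema. The schema, field names, and values are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class Reading:
    """Hypothetical common schema that every source is mapped onto."""
    device_id: str
    observed_at: datetime  # always timezone-aware UTC
    temperature_c: float

# Hypothetical source A: database rows with Celsius values and ISO 8601 timestamps.
db_rows = [{"device": "sensor-1", "ts": "2024-08-12T10:00:00+00:00", "temp_c": 21.5}]

# Hypothetical source B: IoT messages with Fahrenheit values and epoch seconds.
iot_messages = [{"id": "sensor-2", "epoch": 1723453200, "tempF": 71.6}]

def from_db(row: dict) -> Reading:
    observed = datetime.fromisoformat(row["ts"]).astimezone(timezone.utc)
    return Reading(row["device"], observed, row["temp_c"])

def from_iot(msg: dict) -> Reading:
    celsius = (msg["tempF"] - 32) * 5 / 9  # convert units to match the schema
    observed = datetime.fromtimestamp(msg["epoch"], tz=timezone.utc)
    return Reading(msg["id"], observed, round(celsius, 2))

# Centralize both feeds into one time-ordered collection.
unified = sorted(
    [from_db(r) for r in db_rows] + [from_iot(m) for m in iot_messages],
    key=lambda r: r.observed_at,
)
for reading in unified:
    print(reading.device_id, reading.observed_at.isoformat(), reading.temperature_c)
```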

In essence, ETL is the backbone of modern data analytics, empowering organizations to harness the full potential of their data assets. By effectively managing the extraction, transformation, and loading of data, businesses can unlock valuable insights, drive innovation, and gain a competitive edge in today’s data-driven landscape.

Overview of Google Cloud Platform (GCP)

Google Cloud Platform (GCP) is a comprehensive suite of cloud computing services built on the same infrastructure that powers Google’s renowned consumer products such as Google Search, Gmail, and YouTube. Leveraging Google’s vast infrastructure and expertise, GCP offers a wide array of services tailored to meet the diverse needs of modern businesses.

One of the key strengths of GCP lies in its robust solutions for data storage, computing, and analytics. Organizations can leverage GCP’s scalable infrastructure to store and process vast amounts of data efficiently. For data storage, GCP offers services like Google Cloud Storage, providing secure and durable object storage that can accommodate data of any size, from small files to massive datasets.

In terms of data processing and analytics, GCP provides tools such as BigQuery, a data warehouse that lets organizations analyze very large datasets with standard SQL without managing the underlying infrastructure.

Additionally, Dataflow lets organizations build and run ETL pipelines for both batch and streaming data at scale, while Pub/Sub provides a reliable, scalable messaging service for ingesting real-time event streams from various sources.
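Dataflow processes an unbounded Pub/Sub stream by grouping events into windows, for example with Apache Beam’s `FixedWindows`. The standard-library sketch below models only the core idea: assigning each event to a 60-second fixed window by its event time and counting per window. It deliberately ignores watermarks, late data, and triggers, which a real streaming pipeline must handle, and the events are made up.

```python
from collections import Counter

WINDOW_SECONDS = 60  # fixed window size; an illustrative choice

def window_start(event_time: int, size: int = WINDOW_SECONDS) -> int:
    """Map an event timestamp (epoch seconds) to the start of its fixed window."""
    return event_time - (event_time % size)

# Hypothetical messages as (event_time, page) pairs, in arrival order.
events = [
    (1723456800, "home"),
    (1723456815, "cart"),
    (1723456859, "home"),
    (1723456861, "home"),
]

# Count events per (window, page), similar to a per-window group-and-count.
counts = Counter((window_start(ts), page) for ts, page in events)
for (start, page), n in sorted(counts.items()):
    print(start, page, n)
```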

Overall, GCP’s suite of services for data storage, computing, and analytics is designed to be highly scalable and flexible, empowering organizations to harness the full potential of their data assets. Whether dealing with small-scale datasets or petabytes of information, GCP provides the tools and infrastructure needed to handle data efficiently and derive valuable insights to drive business growth and innovation.

Benefits of efficient data transformation

Optimizing ETL processes on GCP not only ensures that data is accurately and quickly transferred but also brings a multitude of benefits to the table.

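Efficient data transformation shortens the time from raw data to insight, lowers processing costs by using only the resources each job needs, and improves data quality by standardizing formats and resolving inconsistencies before analysis.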

Optimized ETL processes thus serve as a backbone for data-driven decision-making, facilitating smoother operations and better strategic planning.

Impact on business operations

The ripple effect of well-optimized ETL processes on business operations cannot be overstated. First and foremost, it allows for real-time or near-real-time analytics, which is critical in today’s fast-paced business environment. This immediacy in data processing enables businesses to react swiftly to market trends, customer behaviors, and operational inefficiencies. Furthermore, by ensuring data integrity and consistency, businesses can rely on their analytics for making significant operational, financial, and strategic decisions. In effect, optimized ETL processes not only streamline data handling but fundamentally transform how businesses leverage data, driving efficiency, innovation, and a competitive edge in the marketplace.

Best Practices for Optimizing ETL on Google Cloud Platform

In the realm of data analytics, the efficiency of ETL (Extract, Transform, Load) processes can significantly impact the speed and quality of insights derived from data. Google Cloud Platform (GCP) offers a robust array of tools designed to streamline and optimize these processes. Let’s explore some of the best practices to enhance your ETL workflows on GCP.

Utilizing Google Cloud Dataflow for scaling

Google Cloud Dataflow is a fully managed service for stream and batch data processing. With Dataflow’s autoscaling, your ETL jobs can scale up and down automatically, optimizing resource utilization and minimizing costs. Dataflow’s key advantage is its ability to adjust computing resources dynamically based on the workload, so your ETL processes are neither under- nor over-provisioned. Additionally, Dataflow integrates seamlessly with other GCP services, providing a cohesive environment for data transformation.
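As an illustration of the idea behind autoscaling, the toy heuristic below sizes a worker pool from the current backlog and clamps it to a configured range. This is not Dataflow’s actual algorithm, and the throughput figures are assumptions. In a real Dataflow job you would instead set pipeline options such as `--autoscaling_algorithm=THROUGHPUT_BASED` and `--max_num_workers` and let the service decide.

```python
import math

def desired_workers(backlog_elements: int, per_worker_throughput: float,
                    target_drain_seconds: float, min_workers: int = 1,
                    max_workers: int = 10) -> int:
    """Toy heuristic, NOT Dataflow's algorithm: enough workers to clear the backlog
    within target_drain_seconds, clamped to [min_workers, max_workers]."""
    if per_worker_throughput <= 0 or target_drain_seconds <= 0:
        raise ValueError("throughput and target time must be positive")
    # Elements one worker can clear in the target time (assumed constant per worker).
    capacity_per_worker = per_worker_throughput * target_drain_seconds
    needed = math.ceil(backlog_elements / capacity_per_worker)
    # The upper bound plays the role of a max-workers cap on cost.
    return max(min_workers, min(max_workers, needed))

for backlog in (0, 50_000, 1_000_000):
    print(backlog, desired_workers(backlog, per_worker_throughput=500, target_drain_seconds=60))
```

The clamp is the key design choice: the upper bound caps spend during spikes, while the lower bound keeps the job responsive when the backlog is empty.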

Leveraging BigQuery for data warehousing

BigQuery, Google’s serverless, highly scalable data warehouse, plays a pivotal role in optimizing ETL processes. Its ability to execute fast SQL queries over large datasets significantly reduces the time required for data transformation and analysis. By leveraging the power of BigQuery, you can streamline the ‘Load’ phase of your ETL process, efficiently managing data ingestion and storage. Moreover, table partitioning and clustering, which you configure when defining a table and which BigQuery maintains automatically as data arrives, further enhance query performance and reduce costs by limiting how much data each query scans.
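Partitioning pays off because a query that filters on the partitioning column reads only the matching partitions. The sketch below models that pruning with an in-memory, day-partitioned table of hypothetical rows, using rows read as a rough stand-in for the bytes BigQuery bills. In BigQuery itself you would declare this in the table definition, for example with `PARTITION BY` on a date column and `CLUSTER BY` on a frequently filtered column.

```python
from collections import defaultdict
from datetime import date

# Hypothetical event rows: (event_date, user_id, payload_bytes).
rows = [
    (date(2024, 8, 10), "u1", 120),
    (date(2024, 8, 11), "u2", 300),
    (date(2024, 8, 11), "u3", 80),
    (date(2024, 8, 12), "u1", 200),
]

# Store rows per day, mimicking a table partitioned on event_date.
partitions: dict[date, list[tuple]] = defaultdict(list)
for row in rows:
    partitions[row[0]].append(row)

def query(start: date, end: date, user_id: str) -> tuple[list[tuple], int]:
    """Filter on the partition column first, so out-of-range partitions are never read."""
    touched = [part for day, part in partitions.items() if start <= day <= end]
    scanned = sum(len(part) for part in touched)  # rows actually read
    matched = [r for part in touched for r in part if r[1] == user_id]
    return matched, scanned

matched, scanned = query(date(2024, 8, 11), date(2024, 8, 12), "u1")
print(f"matched {len(matched)} row(s), scanned {scanned} of {len(rows)} rows")
```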

Automation with Cloud Composer

Repetitive and complex ETL jobs can benefit greatly from automation. Cloud Composer, a managed Apache Airflow service, facilitates this by orchestrating your workflows across various GCP services. With Cloud Composer, you can schedule and monitor ETL tasks from one place. This not only saves valuable time but also increases the reliability of your data pipelines, because Airflow runs tasks in dependency order and automatically retries failed tasks according to the retry settings you define.
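The orchestration behavior described above, running tasks in dependency order and retrying failures, can be modeled in a few lines of standard-library Python. This is a toy stand-in, not Airflow: in a real Cloud Composer DAG you would declare dependencies with expressions such as `extract >> transform >> load` and set `retries` and `retry_delay` on the tasks. The task names and the simulated transient failure are hypothetical.

```python
import time
from graphlib import TopologicalSorter  # Python 3.9+

def run_dag(tasks, deps, retries=2, retry_delay=0.0):
    """Run tasks in dependency order, retrying a failed task up to `retries` extra times.

    tasks: task name -> zero-argument callable
    deps:  task name -> set of upstream task names (every task must appear as a key)
    Returns the number of attempts each task needed.
    """
    attempts = {}
    for name in TopologicalSorter(deps).static_order():
        for attempt in range(1, retries + 2):  # first try plus `retries` retries
            attempts[name] = attempt
            try:
                tasks[name]()
                break  # success: move on to the next task
            except Exception:
                if attempt > retries:
                    raise  # out of retries: stop here, downstream tasks never run
                time.sleep(retry_delay)
    return attempts

log = []
failures_left = {"transform": 1}  # hypothetical: transform fails once, then succeeds

def make_task(name):
    def task():
        if failures_left.get(name, 0) > 0:
            failures_left[name] -= 1
            raise RuntimeError(f"{name}: transient failure")
        log.append(name)
    return task

deps = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
tasks = {name: make_task(name) for name in deps}
attempts = run_dag(tasks, deps)
print(log, attempts)
```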

Monitoring and Performance Tuning ETL Processes

Ensuring your ETL processes run at peak efficiency requires ongoing monitoring and performance tuning. Google Cloud Platform offers tools and features designed to provide insights into your ETL workflows, helping identify bottlenecks and opportunities for optimization.

Implementing monitoring tools on GCP

Google Cloud’s operations suite, formerly known as Stackdriver, is instrumental in monitoring the health and performance of ETL processes. It offers comprehensive logging and monitoring capabilities that allow you to track your Dataflow jobs, BigQuery query execution times, and Cloud Composer’s operational metrics. By analyzing these metrics, you can quickly identify issues and make necessary adjustments to your ETL workflows, ensuring they continue to run smoothly.
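One practical way to make ETL steps easy to analyze in Cloud Logging is to emit structured (JSON) log lines. Where the runtime forwards JSON written to stdout into Cloud Logging (for example Cloud Run or GKE), fields such as `severity` and `message` are treated specially and the remaining keys stay filterable as structured fields. The sketch below assumes such an environment; the job name, stage names, and the 5% drop threshold are hypothetical.

```python
import json
import sys

def log_structured(severity: str, message: str, **fields) -> str:
    """Write one JSON log line to stdout and return it.

    `severity` and `message` are treated specially by Cloud Logging;
    other keys are kept as structured fields you can filter on.
    """
    line = json.dumps({"severity": severity, "message": message, **fields}, sort_keys=True)
    print(line, file=sys.stdout, flush=True)
    return line

def report_stage(job: str, stage: str, rows_in: int, rows_out: int,
                 duration_s: float, max_drop_ratio: float = 0.05) -> str:
    """Log per-stage metrics, escalating to WARNING when too many rows were dropped."""
    drop_ratio = (rows_in - rows_out) / rows_in if rows_in else 0.0
    severity = "WARNING" if drop_ratio > max_drop_ratio else "INFO"
    return log_structured(
        severity, f"{job}/{stage} finished",
        job=job, stage=stage, rows_in=rows_in, rows_out=rows_out,
        drop_ratio=round(drop_ratio, 4), duration_s=duration_s,
    )

line = report_stage("daily_orders", "transform", rows_in=10_000, rows_out=9_200, duration_s=42.7)
```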

Tips for optimizing performance

Optimizing the performance of your ETL processes on GCP involves several strategies. Firstly, consider revisiting your data transformation logic in Dataflow to ensure it’s efficient and not duplicating work. Secondly, regularly evaluate your BigQuery queries and schemas for improvements, such as making use of partitioned tables to accelerate query execution and reduce costs.

Additionally, leverage BigQuery’s materialized views to pre-compute and store complex aggregations, which can drastically speed up query performance for repetitive analyses. Lastly, automate and monitor as much as possible with Cloud Composer and the operations suite to maintain optimal workflow efficiency.
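A materialized view helps because it maintains a precomputed aggregate instead of re-aggregating the base table on every query. The toy model below keeps a per-day revenue total, folds in only newly appended rows, and checks the result against a full recomputation. BigQuery performs this maintenance for you, refreshing materialized views incrementally where the base table changes allow it; the class and data here are purely illustrative and assume an append-only table.

```python
from collections import defaultdict

class DailyRevenueView:
    """Toy 'materialized view': stores SUM(amount) per day and updates it incrementally."""

    def __init__(self):
        self.totals = defaultdict(float)
        self.rows_processed = 0

    def apply(self, new_rows):
        """Fold only the newly appended rows into the stored aggregate."""
        for day, amount in new_rows:
            self.totals[day] += amount
            self.rows_processed += 1

def recompute(all_rows):
    """What a plain (non-materialized) query does each time: aggregate everything again."""
    totals = defaultdict(float)
    for day, amount in all_rows:
        totals[day] += amount
    return totals

base_table = [("2024-08-11", 10.0), ("2024-08-11", 5.0)]  # hypothetical rows
view = DailyRevenueView()
view.apply(base_table)

batch = [("2024-08-12", 7.5)]
base_table.extend(batch)
view.apply(batch)  # this refresh touches 1 new row, not all 3

assert dict(view.totals) == dict(recompute(base_table))
print(dict(view.totals), view.rows_processed)
```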

By following these best practices for optimizing ETL on Google Cloud Platform and continually monitoring and tuning your processes, you can achieve highly efficient data transformation workflows that support faster, data-driven decision-making.

Security Considerations for ETL on GCP

When moving your ETL (Extract, Transform, Load) processes to the Google Cloud Platform (GCP), securing your data is a top priority. GCP offers robust security features, ensuring that your data transformation tasks are not only efficient but also secure. Let’s dive into some of the key security considerations you should be aware of.

Data encryption methods

GCP provides several data encryption methods to protect your data at rest and in transit. By default, all data stored on GCP is encrypted before it’s written to disk, using Google-managed keys and without any additional action from you. However, if you require a higher level of control, GCP offers customer-managed encryption keys (CMEK) through Cloud Key Management Service (KMS). This lets you manage the keys yourself, including rotating, disabling, or destroying them according to your organization’s security policies.

Furthermore, when your data is moving between your site and the Google Cloud, or between different services within the cloud, GCP automatically encrypts this data in transit. This ensures that your sensitive ETL data remains secure as it moves across the internet and within Google’s network.

Role-based access control

Implementing role-based access control (RBAC) is crucial in securing your ETL processes on GCP. In Google Cloud, this is done through Identity and Access Management (IAM), which lets you define who has access to which resources in your environment. By granting roles to users, groups, or service accounts, you control their access levels, ensuring that only authorized principals can perform specific operations on your ETL data and processes.

GCP offers predefined roles with granular permissions designed for specific tasks and resources, such as BigQuery Data Editor or Dataflow Admin. Moreover, if the predefined roles don’t meet your specific needs, you can create custom roles, providing you with the flexibility to tailor permissions exactly as your project requires.
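Here is a simplified model of the idea: IAM roles are sets of permissions, and an allow policy binds roles to principals. The sketch below uses real permission names, but each role lists only an illustrative subset of what the predefined role actually grants. The custom role `etlLoader`, the project `my-project`, and the principals are hypothetical, and real IAM also accounts for the resource hierarchy, conditions, and deny policies, which are omitted here.

```python
# Illustrative subsets only: the real predefined roles grant more permissions.
ROLES = {
    "roles/bigquery.dataViewer": {
        "bigquery.tables.get", "bigquery.tables.getData", "bigquery.tables.list",
    },
    "roles/bigquery.dataEditor": {
        "bigquery.tables.get", "bigquery.tables.getData", "bigquery.tables.list",
        "bigquery.tables.create", "bigquery.tables.updateData",
    },
    "roles/dataflow.admin": {
        "dataflow.jobs.create", "dataflow.jobs.cancel", "dataflow.jobs.get", "dataflow.jobs.list",
    },
    # Hypothetical least-privilege custom role for a pipeline that only reads and writes rows.
    "projects/my-project/roles/etlLoader": {
        "bigquery.tables.getData", "bigquery.tables.updateData",
    },
}

# Hypothetical bindings: principal -> roles granted to it.
BINDINGS = {
    "group:analysts@example.com": {"roles/bigquery.dataViewer"},
    "serviceAccount:etl-pipeline@my-project.iam.gserviceaccount.com": {
        "projects/my-project/roles/etlLoader",
    },
}

def is_allowed(principal: str, permission: str) -> bool:
    """True if any role bound to the principal contains the permission."""
    return any(permission in ROLES[role] for role in BINDINGS.get(principal, ()))

print(is_allowed("group:analysts@example.com", "bigquery.tables.updateData"))
```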

Case Studies

Exploring real-life examples of optimized ETL processes on GCP can provide valuable insights and inspiration for your own projects. Let’s look at some success stories and learnings from organizations that have effectively utilized GCP for their ETL needs.

Success stories of optimized ETL processes on GCP

One notable success story comes from Spotify, which migrated its extensive data processing pipelines to GCP. By leveraging Google’s BigQuery for data transformation and analysis, Spotify achieved a significant reduction in the latency of data delivery, from hours to minutes. This improvement enabled Spotify to provide more timely, personalized music recommendations to its users.

Another example is PayPal, which utilizes GCP’s Cloud Dataflow for real-time data processing. This allowed PayPal to efficiently handle billions of transactions, performing complex ETL tasks that ensure data accuracy and timeliness. As a result, PayPal improved its fraud detection capabilities, providing a safer transaction environment for its users.

Learnings from real-world implementations

From these case studies, several key learnings emerge. First, it’s crucial to fully understand your data workflows and the specific requirements of your ETL processes before choosing the right tools and services on GCP. This ensures that you’re leveraging GCP’s capabilities most effectively.

Second, don’t underestimate the importance of scalability. GCP’s fully managed services, such as BigQuery and Dataflow, can scale automatically to handle peaks in data processing demand, which is crucial for maintaining performance and efficiency.

Lastly, continuously monitor and optimize your ETL processes. GCP provides tools like Cloud Monitoring and Cloud Logging, which can help you gain insights into your ETL pipelines and identify areas for improvement.

Let’s wrap up!

When it comes to optimizing ETL processes on Google Cloud Platform (GCP), every step you take towards improvement not only streamlines your data transformation efforts but also empowers your organization with more accurate and timely insights.

Remember, optimization is not a one-time task but a continuous journey where you constantly seek better efficiency and performance. By keeping your pipelines well monitored, leveraging the power of BigQuery, using the flexibility of Cloud Dataflow, and keeping cost efficiency in view, you set up your ETL processes to stay fast, reliable, and affordable as your data grows.

In the world of data, seconds saved in processing can lead to valuable insights gained, and optimizing your ETL processes on GCP can significantly contribute to that goal. Keep experimenting with the myriad of tools and features offered by GCP, and don’t hesitate to adopt new practices that can enhance your data transformation operations. With diligence, creativity, and ongoing education, you can unlock the full potential of your data on GCP, transforming it into a true asset for your organization.